Quickstart

A Bayesian Belief Network (BBN) is defined as a pair \((D, P)\) where

\(D\) is a directed acyclic graph (DAG), and

\(P\) is a joint distribution over a set of variables corresponding to the nodes in the DAG.
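In a BBN, \(P\) factorizes according to \(D\): each variable contributes one conditional distribution given its parents \(\mathrm{pa}(V_i)\) in the DAG. For the three-node DAG used below (\(C \to X\), \(C \to Y\), \(X \to Y\)), the general factorization specializes as follows:

\[
P(V_1, \dots, V_n) = \prod_{i=1}^{n} P\big(V_i \mid \mathrm{pa}(V_i)\big),
\qquad
P(C, X, Y) = P(C)\, P(X \mid C)\, P(Y \mid C, X).
\]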

Creating a reasoning model involves defining \(D\) and \(P\). If the BBN is causal, we can use it to answer three types of queries:

Associational: queries that estimate conditional relationships.

Interventional: queries that estimate causal effects.

Counterfactual: queries that estimate outcomes based on observed events and hypothetical actions.
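Using the variables of the model built below, the three query types correspond to the following quantities in Pearl's notation, where \(Y_{X = x'}\) denotes the value \(Y\) would have taken had \(X\) been set to \(x'\):

\[
\begin{aligned}
\text{Associational:}\quad & P(Y \mid X = x) \\
\text{Interventional:}\quad & P\big(Y \mid \mathrm{do}(X = x)\big) \\
\text{Counterfactual:}\quad & P\big(Y_{X = x'} \mid X = x,\ Y = y\big)
\end{aligned}
\]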

In this notebook, we show how to quickly use py-scm to create a Gaussian BBN and conduct different types of causal inferences.

Creating a model

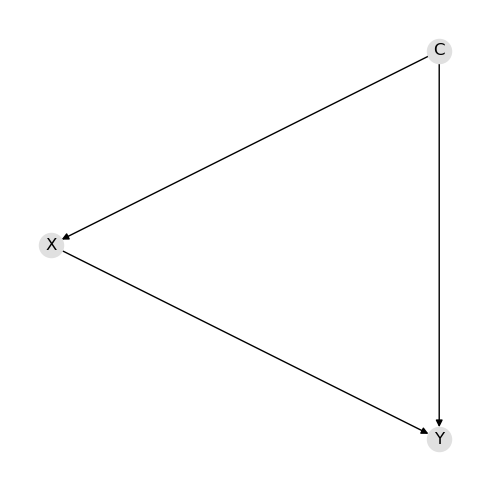

Create the structure (DAG)

Creating the DAG means defining the nodes and the directed edges between them.

```python
d = {
    'nodes': ['C', 'X', 'Y'],
    'edges': [
        ('C', 'X'),
        ('C', 'Y'),
        ('X', 'Y')
    ]
}
```
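Independently of py-scm, the standard library's `graphlib` (Python 3.9+) can confirm that a `{'nodes', 'edges'}` dictionary like `d` actually describes a DAG. The helper `topological_order` below is hypothetical (not part of py-scm); it is a minimal sketch that raises `CycleError` if the edges form a cycle.

```python
from graphlib import CycleError, TopologicalSorter


def topological_order(d):
    """Return the nodes of a {'nodes', 'edges'} dict in topological order.

    Hypothetical helper: raises graphlib.CycleError if the edges contain a cycle.
    """
    sorter = TopologicalSorter({node: set() for node in d['nodes']})
    for parent, child in d['edges']:
        sorter.add(child, parent)  # child must come after its parent
    return list(sorter.static_order())


d = {
    'nodes': ['C', 'X', 'Y'],
    'edges': [('C', 'X'), ('C', 'Y'), ('X', 'Y')],
}
print(topological_order(d))  # ['C', 'X', 'Y']

try:
    topological_order({'nodes': ['A', 'B'], 'edges': [('A', 'B'), ('B', 'A')]})
except CycleError:
    print('not a DAG')
```

For this DAG the order is unique, because \(C\) precedes \(X\) and both precede \(Y\).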

```python
from pyscm.serde import dict_to_graph
import networkx as nx
import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(5, 5))

# Convert the dictionary into a networkx graph
g = dict_to_graph(d)

# graphviz_layout requires the pygraphviz package to be installed
pos = nx.nx_agraph.graphviz_layout(g, prog='dot')

nx.draw(g, pos=pos, with_labels=True, node_color='#e0e0e0', ax=ax)
fig.tight_layout()
```

Create the parameters

Creating the parameters means defining the means and the covariance matrix. The means and covariance below were estimated from data simulated with the following normal distributions, in which each variable depends linearly on its parents:

\(C \sim \mathcal{N}(1, 1)\)

\(X \sim \mathcal{N}(2 + 3 C, 1)\)

\(Y \sim \mathcal{N}(0.5 + 2.5 C + 1.5 X, 1)\)
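To see where the numbers in `p` come from, the population mean vector and covariance matrix implied by these equations can be derived by propagating moments in topological order. The dictionary format for `equations` is our own illustration, not a py-scm structure.

```python
# The equations above: intercept + weighted parents + independent N(0, noise_var) noise.
equations = {
    'C': {'intercept': 1.0, 'parents': {}, 'noise_var': 1.0},
    'X': {'intercept': 2.0, 'parents': {'C': 3.0}, 'noise_var': 1.0},
    'Y': {'intercept': 0.5, 'parents': {'C': 2.5, 'X': 1.5}, 'noise_var': 1.0},
}
order = ['C', 'X', 'Y']  # parents always precede their children


def implied_moments(equations, order):
    """Population mean vector and covariance matrix of a linear Gaussian SCM."""
    mean, cov = {}, {}
    for j, v in enumerate(order):
        eq = equations[v]
        parents = eq['parents'].items()
        mean[v] = eq['intercept'] + sum(w * mean[p] for p, w in parents)
        # Cov(v, u) for each earlier u: the noise of v is independent of u.
        for u in order[:j]:
            cov[v, u] = cov[u, v] = sum(w * cov[p, u] for p, w in parents)
        # Var(v) = w' Cov(parents) w + noise variance.
        cov[v, v] = eq['noise_var'] + sum(
            wp * wq * cov[p, q] for p, wp in parents for q, wq in parents
        )
    m = [mean[v] for v in order]
    S = [[cov[a, b] for b in order] for a in order]
    return m, S


m, S = implied_moments(equations, order)
print(m)  # [1.0, 5.0, 10.5]
print(S)  # [[1.0, 3.0, 7.0], [3.0, 10.0, 22.5], [7.0, 22.5, 52.25]]
```

The estimated values in `p` (for example, 52.128 versus 52.25 for the variance of \(Y\)) differ from these population values only by sampling error.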

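```python
p = {
    'v': ['C', 'X', 'Y'],                        # variable order
    'm': [1.00172341, 4.99599921, 10.5032959],   # means, in the order of 'v'
    'S': [                                       # covariance matrix, rows/columns in the order of 'v'
        [0.99070024, 2.97994442, 6.95690224],
        [2.97994442, 9.97338239, 22.44685389],
        [6.95690224, 22.44685389, 52.12803651]
    ]
}
```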
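For a multivariate normal to be well defined, `S` must be symmetric and positive definite. The notebook does not show whether py-scm checks this, so here is a library-independent sketch that applies Sylvester's criterion (every leading principal minor is strictly positive). The helpers `det` and `is_valid_covariance` are hypothetical names chosen for illustration.

```python
def det(M):
    """Determinant by cofactor expansion along the first row (fine for small matrices)."""
    if len(M) == 1:
        return M[0][0]
    return sum(
        (-1) ** j * M[0][j] * det([row[:j] + row[j + 1:] for row in M[1:]])
        for j in range(len(M))
    )


def is_valid_covariance(S, tol=1e-9):
    """Check symmetry and positive definiteness via Sylvester's criterion."""
    n = len(S)
    symmetric = all(abs(S[i][j] - S[j][i]) <= tol for i in range(n) for j in range(n))
    # Every leading principal minor (top-left k x k block) must be positive.
    minors_positive = all(det([row[:k] for row in S[:k]]) > 0 for k in range(1, n + 1))
    return symmetric and minors_positive


S = [
    [0.99070024, 2.97994442, 6.95690224],
    [2.97994442, 9.97338239, 22.44685389],
    [6.95690224, 22.44685389, 52.12803651],
]
print(is_valid_covariance(S))                       # True
print(is_valid_covariance([[1.0, 2.0], [2.0, 1.0]]))  # False: determinant is -3
```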

Create the model

With the DAG and the parameters defined, we can now create the reasoning model.

```python
from pyscm.reasoning import create_reasoning_model

model = create_reasoning_model(d, p)
```

Associational query

You can conduct associational queries with or without evidence.

Query without evidence

An associational query is performed by invoking the pquery() method. It returns a tuple whose first element is the mean vector and whose second element is the covariance matrix; together, they are the parameters of the resulting multivariate normal distribution.

```python
q = model.pquery()
```

```python
q[0]
```

```
C     1.001723
X     4.995999
Y    10.503296
dtype: float64
```

```python
q[1]
```

| | C | X | Y |
|---|---|---|---|
| C | 0.990700 | 2.979944 | 6.956902 |
| X | 2.979944 | 9.973382 | 22.446854 |
| Y | 6.956902 | 22.446854 | 52.128037 |
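Because the result is a multivariate normal, the marginal distribution of any subset of variables is obtained by keeping the corresponding entries of the mean vector and the matching rows and columns of the covariance matrix; nothing needs to be integrated. A plain-Python sketch using the values from `p` (the `marginal` helper is hypothetical):

```python
def marginal(v, m, S, keep):
    """Marginal of a multivariate normal: select the kept entries of m and S."""
    idx = [v.index(name) for name in keep]
    return [m[i] for i in idx], [[S[i][j] for j in idx] for i in idx]


v = ['C', 'X', 'Y']
m = [1.00172341, 4.99599921, 10.5032959]
S = [
    [0.99070024, 2.97994442, 6.95690224],
    [2.97994442, 9.97338239, 22.44685389],
    [6.95690224, 22.44685389, 52.12803651],
]
m_cy, S_cy = marginal(v, m, S, ['C', 'Y'])
print(m_cy)  # [1.00172341, 10.5032959]
print(S_cy)  # [[0.99070024, 6.95690224], [6.95690224, 52.12803651]]
```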

Query with evidence

If you have evidence, pass a dictionary that maps each observed variable to its value to pquery().

```python
q = model.pquery({'X': 2.0})
```

```python
q[0]
```

```
C    0.106550
X    2.000000
Y    3.760272
dtype: float64
```

```python
q[1]
```

| | C | X | Y |
|---|---|---|---|
| C | 0.100323 | 2.979944 | 0.250012 |
| X | 2.979944 | 9.973382 | 22.446854 |
| Y | 0.250012 | 22.446854 | 1.607438 |
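Conditioning a multivariate normal on observed variables \(B = b\) uses the textbook formulas \(\mu_{A \mid b} = \mu_A + \Sigma_{AB}\Sigma_{BB}^{-1}(b - \mu_B)\) and \(\Sigma_{A \mid b} = \Sigma_{AA} - \Sigma_{AB}\Sigma_{BB}^{-1}\Sigma_{BA}\). Below is a plain-Python sketch for a single observed variable, where the matrix inverse reduces to a division. It uses the values in `p` and is the standard formula, not necessarily how pquery() is implemented; `condition_on_one` is a hypothetical helper.

```python
v = ['C', 'X', 'Y']
m = [1.00172341, 4.99599921, 10.5032959]
S = [
    [0.99070024, 2.97994442, 6.95690224],
    [2.97994442, 9.97338239, 22.44685389],
    [6.95690224, 22.44685389, 52.12803651],
]


def condition_on_one(v, m, S, name, value):
    """Condition a multivariate normal on a single observed variable b = name.

    mean_A|b = m_A + S_Ab (value - m_b) / S_bb
    cov_A|b  = S_AA - S_Ab S_bA / S_bb
    """
    b = v.index(name)
    rest = [i for i in range(len(v)) if i != b]
    mean = {v[i]: m[i] + S[i][b] * (value - m[b]) / S[b][b] for i in rest}
    cov = {
        (v[i], v[j]): S[i][j] - S[i][b] * S[b][j] / S[b][b]
        for i in rest
        for j in rest
    }
    return mean, cov


mean, cov = condition_on_one(v, m, S, 'X', 2.0)
print(mean)  # conditional means of C and Y
print(cov[('C', 'C')], cov[('C', 'Y')], cov[('Y', 'Y')])
```

The resulting means and covariances of \(C\) and \(Y\) agree with the pquery({'X': 2.0}) output above to within about 1e-5. \(X\) itself is omitted here, since conditioning fixes its value.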

Interventional query

An interventional query involves graph surgery: the edges from the parents of the manipulated variable into that variable are removed, and the variable is set to a fixed value. Interventional queries are conducted by invoking the iquery() method, which takes the target variable and a dictionary of interventions. Below, the do operator is applied to \(X\) (i.e., \(\mathrm{do}(X = 2)\)) and \(Y\) is queried. Unlike pquery(), iquery() returns a Series holding the mean and the standard deviation of the target variable.

```python
model.iquery('Y', {'X': 2.0})
```

```
mean    6.036492
std     2.389106
dtype: float64
```
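Graph surgery can also be carried out by hand on the structural equations from "Create the parameters". The sketch below uses the true coefficients (not the estimated `p`, and not py-scm's internals), so its result need not equal the iquery() output. Cutting the edge \(C \to X\) leaves \(C\) with its own distribution, so \(Y = 0.5 + 2.5C + 1.5x + \varepsilon_Y\).

```python
import math

# Structural equations from the text (true coefficients, unit-variance noise):
#   C = 1 + e_C,  X = 2 + 3 C + e_X,  Y = 0.5 + 2.5 C + 1.5 X + e_Y
MEAN_C, VAR_C, VAR_E_Y = 1.0, 1.0, 1.0


def y_under_do_x(x):
    """Mean and std of Y after do(X=x): X's equation is replaced by the constant x."""
    mean = 0.5 + 2.5 * MEAN_C + 1.5 * x
    # C still varies; X no longer does, so only C and e_Y contribute variance.
    var = 2.5 ** 2 * VAR_C + VAR_E_Y
    return mean, math.sqrt(var)


print(y_under_do_x(2.0))  # mean 6.0, std about 2.693
```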

Compare queries

Let's compare the expected value of \(Y\) under the marginal distribution, the associational query conditioned on \(X = 2\), and the interventional query \(\mathrm{do}(X = 2)\).

```python
import pandas as pd

pd.Series({
    'marginal': model.pquery()[0].loc['Y'],
    'conditional': model.pquery({'X': 2.0})[0].loc['Y'],
    'causal': model.iquery('Y', {'X': 2.0}).loc['mean']
})
```

```
marginal       10.503296
conditional     3.760272
causal          6.036492
dtype: float64
```
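The gap between the conditional and causal estimates comes from the confounder \(C\): observing a low \(X\) also signals a low \(C\), which lowers \(Y\) through the edge \(C \to Y\), whereas setting \(X\) leaves \(C\) untouched. The Monte Carlo sketch below is our own simulation of the true structural equations, not py-scm. It approximates conditioning by keeping draws with \(X\) near 2, simulates the intervention by replacing \(X\)'s equation, and should print values near 10.5, 3.75, and 6.0.

```python
import random
import statistics


def sample(rng, do_x=None):
    """Draw one (C, X, Y) from the structural equations in the text.

    If do_x is given, X's equation is replaced by the constant do_x (graph surgery).
    """
    c = 1.0 + rng.gauss(0.0, 1.0)
    x = do_x if do_x is not None else 2.0 + 3.0 * c + rng.gauss(0.0, 1.0)
    y = 0.5 + 2.5 * c + 1.5 * x + rng.gauss(0.0, 1.0)
    return c, x, y


rng = random.Random(0)
observational = [sample(rng) for _ in range(200_000)]
interventional = [sample(rng, do_x=2.0) for _ in range(200_000)]

marginal = statistics.fmean(y for _, _, y in observational)
# Approximate conditioning on X = 2 by keeping draws with X in a narrow window.
conditional = statistics.fmean(y for _, x, y in observational if abs(x - 2.0) < 0.05)
causal = statistics.fmean(y for _, _, y in interventional)
print(f'{marginal=:.2f} {conditional=:.2f} {causal=:.2f}')
```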

Counterfactual

Counterfactual queries are conducted using cquery(), which takes the target variable, the factual evidence, and the counterfactual manipulations. Below, the factual evidence, f, is what has already happened; all variables must be observed. The counterfactual manipulations, cf, are what we hypothesize had happened instead; note that cf is a list of hypothetical situations. In plain language, we are asking the following.

Given that we observed C=0.945536, X=4.970491, and Y=10.542022, what would Y have been if

X=1?

X=2?

X=3?

C=2 and X=3?

```python
f = {
    'C': 0.945536,
    'X': 4.970491,
    'Y': 10.542022
}

cf = [
    {'X': 1},
    {'X': 2},
    {'X': 3},
    {'C': 2, 'X': 3}
]

q = model.cquery('Y', f, cf)
```

```python
q
```

| | C | X | factual | counterfactual |
|---|---|---|---|---|
| 0 | 0.945536 | 1 | 10.542022 | 7.923450 |
| 1 | 0.945536 | 2 | 10.542022 | 8.582958 |
| 2 | 0.945536 | 3 | 10.542022 | 9.242467 |
| 3 | 2.000000 | 3 | 10.542022 | 9.529814 |
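The standard recipe for a counterfactual in a structural causal model has three steps: abduction (infer the noise terms from the factual observation), action (apply the hypothetical intervention), and prediction (recompute downstream variables with the inferred noise). The sketch below applies that recipe to the true structural equations from "Create the parameters". py-scm works from the estimated parameters and its exact procedure is not shown in this notebook, so the table above need not match this sketch; the helper names are hypothetical.

```python
# Structural equations from the text (true coefficients); u_* are noise terms.
def f_C(u_c):
    return 1.0 + u_c


def f_X(c, u_x):
    return 2.0 + 3.0 * c + u_x


def f_Y(c, x, u_y):
    return 0.5 + 2.5 * c + 1.5 * x + u_y


def counterfactual_y(factual, intervention):
    """Abduction-action-prediction for Y in a fully observed linear SCM."""
    # 1. Abduction: recover each noise term from the observed values.
    u_c = factual['C'] - f_C(0.0)
    u_x = factual['X'] - f_X(factual['C'], 0.0)
    u_y = factual['Y'] - f_Y(factual['C'], factual['X'], 0.0)
    # 2. Action: intervened variables take their set values; others keep their equations.
    c = intervention.get('C', f_C(u_c))
    x = intervention.get('X', f_X(c, u_x))
    # 3. Prediction: recompute Y with the recovered noise.
    return f_Y(c, x, u_y)


f = {'C': 0.945536, 'X': 4.970491, 'Y': 10.542022}
cf = [{'X': 1}, {'X': 2}, {'X': 3}, {'C': 2, 'X': 3}]
for intervention in cf:
    print(intervention, counterfactual_y(f, intervention))
```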

Data sampling

To sample data from the model, invoke the samples() method. Called without arguments, as below, it returned 1,000 rows with one column per variable.

```python
sample_df = model.samples()
sample_df.shape
```

```
(1000, 3)
```

```python
sample_df.head()
```

| | C | X | Y |
|---|---|---|---|
| 0 | 1.664294 | 6.427497 | 14.437620 |
| 1 | 3.466292 | 12.487412 | 26.872974 |
| 2 | -0.766829 | 0.356344 | -1.719488 |
| 3 | 1.264512 | 5.897438 | 12.794257 |
| 4 | 1.425076 | 6.739629 | 13.730448 |

```python
sample_df.mean()
```

```
C     1.032158
X     5.040711
Y    10.581092
dtype: float64
```

```python
sample_df.cov()
```

| | C | X | Y |
|---|---|---|---|
| C | 1.004426 | 3.016373 | 7.073488 |
| X | 3.016373 | 10.096769 | 22.829570 |
| Y | 7.073488 | 22.829570 | 53.183407 |
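The notebook does not show how samples() generates its draws. One standard way to sample from \(\mathcal{N}(m, S)\), sketched below in plain Python as an assumption rather than a description of py-scm, is to factor \(S = LL^\top\) (Cholesky) and return \(m + Lz\) for standard normal \(z\). With 1,000 draws, sample moments deviate from the model's parameters by sampling error, as in the mean and covariance above.

```python
import random


def cholesky(S):
    """Lower-triangular L with L L^T = S (S must be symmetric positive definite)."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = (S[i][i] - s) ** 0.5
            else:
                L[i][j] = (S[i][j] - s) / L[j][j]
    return L


def sample_mvn(m, S, n, seed=None):
    """Draw n samples from N(m, S) as m + L z with z ~ N(0, I)."""
    rng = random.Random(seed)
    L = cholesky(S)
    d = len(m)
    rows = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        rows.append([m[i] + sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(d)])
    return rows


m = [1.00172341, 4.99599921, 10.5032959]
S = [
    [0.99070024, 2.97994442, 6.95690224],
    [2.97994442, 9.97338239, 22.44685389],
    [6.95690224, 22.44685389, 52.12803651],
]
rows = sample_mvn(m, S, 1000, seed=42)
print(len(rows), len(rows[0]))  # 1000 3
```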

Serde

Saving and loading the model is easy.

Serialization

To persist the model, convert it to a dictionary with the model_to_dict() function from pyscm.serde and write the dictionary as JSON.

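```python
import json
import tempfile

from pyscm.serde import model_to_dict

# Convert the model to a dictionary that json can serialize
data1 = model_to_dict(model)

# Write it to a temporary file that stays on disk after closing (delete=False)
with tempfile.NamedTemporaryFile(mode='w', delete=False) as fp:
    json.dump(data1, fp)
    file_path = fp.name

print(f'{file_path=}')
```

```
file_path='/var/folders/vt/g8zbc68n2nj8dkk85n8b19440000gn/T/tmppwpb79kp'
```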
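One general JSON caveat applies if you serialize dictionaries such as `d` yourself: JSON has no tuple type, so the edge tuples come back as lists. Whether py-scm accepts list-valued edges is not shown here, so this sketch converts them back to tuples after the round trip.

```python
import json

d = {
    'nodes': ['C', 'X', 'Y'],
    'edges': [('C', 'X'), ('C', 'Y'), ('X', 'Y')],
}

restored = json.loads(json.dumps(d))
print(restored['edges'])  # [['C', 'X'], ['C', 'Y'], ['X', 'Y']]
print(restored == d)      # False: the tuples came back as lists

# Convert the edge lists back to tuples if downstream code expects tuples.
restored['edges'] = [tuple(edge) for edge in restored['edges']]
print(restored == d)      # True
```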

Deserialization

To load the model, read the JSON back into a dictionary and pass it to the dict_to_model() function from pyscm.serde.

```python
from pyscm.serde import dict_to_model

with open(file_path, 'r') as fp:
    data2 = json.load(fp)

model2 = dict_to_model(data2)
```

```python
model2
```

```
ReasoningModel[H=[C,X,Y], M=[1.002,4.996,10.503], C=[[0.991,2.980,6.957]|[2.980,9.973,22.447]|[6.957,22.447,52.128]]]
```
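Because the serialization cell uses `NamedTemporaryFile(delete=False)`, the file stays on disk after the notebook finishes. If the file is only needed for the round trip, `tempfile.TemporaryDirectory` removes it automatically. The sketch uses a hypothetical stand-in dictionary in place of `model_to_dict(model)` so it runs without py-scm.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical stand-in for model_to_dict(model); any JSON-serializable dict works.
data1 = {'example': [1, 2, 3]}

with tempfile.TemporaryDirectory() as tmp_dir:
    file_path = Path(tmp_dir) / 'model.json'
    file_path.write_text(json.dumps(data1))
    data2 = json.loads(file_path.read_text())
    # With py-scm installed: model2 = dict_to_model(data2)

print(data2 == data1)      # True
print(file_path.exists())  # False: the directory is deleted on exit
```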